OpenAI Launches gpt-oss-120b and gpt-oss-20b: Features, Specs & Where to Download the Open-Weight AI Models

OpenAI has officially released two open-weight language models, gpt-oss-120b and gpt-oss-20b, marking a major milestone for openly available AI. They are OpenAI's first open-weight language models since GPT-2, which was released in 2019.

gpt-oss-120b and gpt-oss-20b Launched: Where and How to Download

The models are available on multiple platforms, including Hugging Face, Databricks, Azure and AWS, making them easy to access for a wide range of users. They are released under the permissive Apache 2.0 license, which allows full commercial use: businesses can integrate them into proprietary products, services or systems with minimal obligations, chiefly keeping the license and copyright notices intact.

Features of the gpt-oss Models

The models come in two sizes: roughly 120 billion parameters and roughly 20 billion parameters. Despite its scale, the larger gpt-oss-120b is optimised for efficient deployment and can run on a single 80GB GPU (such as an Nvidia H100), with OpenAI reporting near-parity with its o4-mini model on core reasoning benchmarks.

The 20b version is lighter still: it can run on devices with just 16GB of memory and delivers results similar to OpenAI's o3-mini on common benchmarks. This lowers the hardware bar and widens the range of devices and use cases where the models can be deployed.
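
These hardware figures become easier to follow with some back-of-the-envelope arithmetic. The sketch below estimates the memory needed for the weights alone at 16-bit precision versus about 4.25 bits per parameter. The 4.25-bit figure assumes MXFP4-style quantization (4-bit values plus one 8-bit scale shared by each block of 32 values), which OpenAI has said it uses for the models' MoE weights; applying it to every parameter, using the nominal sizes from the model names, and ignoring activations and the KV cache are all simplifying assumptions.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Nominal sizes taken from the model names; real parameter counts differ slightly.
MODELS = {"gpt-oss-20b": 20e9, "gpt-oss-120b": 120e9}

# Assumption: ~4.25 bits/param approximates MXFP4 (4-bit values plus one 8-bit
# scale shared by each block of 32 values), applied to all weights for simplicity.
MXFP4_BITS = 4 + 8 / 32

for name, params in MODELS.items():
    bf16 = weight_memory_gb(params, 16)
    mxfp4 = weight_memory_gb(params, MXFP4_BITS)
    print(f"{name}: ~{bf16:.0f} GB at 16-bit, ~{mxfp4:.1f} GB at ~4.25-bit")
```

By this estimate, the 20b model's weights would need about 40GB at 16-bit precision, far more than a 16GB machine offers, but only about 10.6GB at roughly 4 bits per parameter. The same arithmetic puts the 120b model at about 64GB, which fits within a single 80GB GPU.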

One of the standout features of the gpt-oss models is support for local deployment and offline use. Because the weights can be downloaded, the models can run entirely on local hardware without an internet connection or external servers, which improves privacy, security and independence from cloud providers. This is particularly valuable for businesses and developers with strict data-governance requirements or limited connectivity.

Both models use a Mixture-of-Experts (MoE) architecture, in which a router sends each token to a small subset of specialised "expert" sub-networks. As a result, only a fraction of the parameters is active for any given token: about 5.1 billion per token in the 120b model and about 3.6 billion in the 20b model. This selective activation cuts the compute needed per token and reduces latency, although all of the weights must still fit in memory.
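
To make the routing idea concrete, here is a minimal toy sketch of top-k expert routing in plain Python. The sizes (8-dimensional tokens, 16 experts, 2 active per token) and the single-linear-layer "experts" are illustrative assumptions, not gpt-oss's actual configuration; production MoE layers use feed-forward experts and operate on batched tensors.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, rng):
    """A toy expert: one dense dim x dim linear layer with random weights."""
    w = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
    def expert(x):
        return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return expert

def moe_layer(x, router, experts, top_k):
    """Route token vector x to its top_k experts and mix their outputs.

    Only the selected experts are evaluated; the rest stay idle for this token.
    """
    # One router score per expert: dot product of that router row with x.
    scores = [sum(r * xi for r, xi in zip(row, x)) for row in router]
    # Indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the chosen scores gives the mixing weights (gates).
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

rng = random.Random(0)
DIM, NUM_EXPERTS, TOP_K = 8, 16, 2  # toy sizes, not gpt-oss's real configuration
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
router = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0, 1) for _ in range(DIM)]

output, used = moe_layer(token, router, experts, TOP_K)
print(f"experts used for this token: {used} ({TOP_K} of {NUM_EXPERTS})")
# The article's figure: about 5.1B active out of about 120B total parameters.
print(f"gpt-oss-120b active share per token: {5.1e9 / 120e9:.1%}")
```

Only 2 of the 16 toy experts do any work for this token, which is the same principle that lets gpt-oss-120b activate only a few percent of its parameters per token.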

After pretraining, both models were post-trained with a high-compute reinforcement learning stage, following a process similar to the one OpenAI uses for its proprietary o-series reasoning models. This stage sharpens their reasoning and instruction-following abilities and brings their behaviour closer to the o-series, so the models aim to be accurate as well as efficient.

OpenAI also said the models are compatible with its Responses API and are designed for agentic workflows. They can follow detailed instructions, use tools such as Python code execution and web search, and produce structured outputs. Developers can set the reasoning effort (low, medium or high) to match task complexity, trading speed for depth: low effort suits latency-sensitive operations, while high effort suits involved, multi-step processes.
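
As a rough illustration of choosing reasoning effort per task, the sketch below builds (but does not send) a Responses API-style request in which a simple heuristic picks the effort level. The `reasoning.effort` field with values `low`, `medium` and `high` follows OpenAI's Responses API. The endpoint URL, the served model name `openai/gpt-oss-20b` and the `pick_effort` heuristic are assumptions for illustration: the URL presumes a locally hosted, OpenAI-compatible server that implements the Responses API, and the heuristic is hypothetical, not OpenAI's.

```python
import json
import urllib.request

# Placeholder endpoint (assumption): a locally hosted, OpenAI-compatible server
# implementing the Responses API. Adjust host, port and path for your setup.
RESPONSES_URL = "http://localhost:8000/v1/responses"

def pick_effort(task: str) -> str:
    """Hypothetical heuristic: longer, multi-step prompts get more reasoning."""
    # Crude proxy for multi-step tasks; may also match words like "strengthen".
    steps = task.count("then") + task.count(";")
    if len(task) < 80 and steps == 0:
        return "low"
    if steps < 3:
        return "medium"
    return "high"

def build_request(task: str, model: str = "openai/gpt-oss-20b") -> urllib.request.Request:
    """Build a POST request carrying a Responses API-style JSON body."""
    body = {
        "model": model,  # served model name depends on your server (assumption)
        "input": task,
        "reasoning": {"effort": pick_effort(task)},
    }
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_request("What is 17 * 23?")
print(json.loads(req.data)["reasoning"])  # {'effort': 'low'}
```

Sending the request (for example with `urllib.request.urlopen(req)`) only works if such a server is actually running; a production setup would more likely let the caller choose the effort level explicitly.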

Moreover, the gpt-oss models support chain-of-thought reasoning, working through problems step by step in tasks that demand logic, planning or structured formats. Because the weights are open, they can also be fine-tuned and customised, which makes them well suited to applications across industries, from enterprise automation and data analysis to customer service and intelligent agent development.

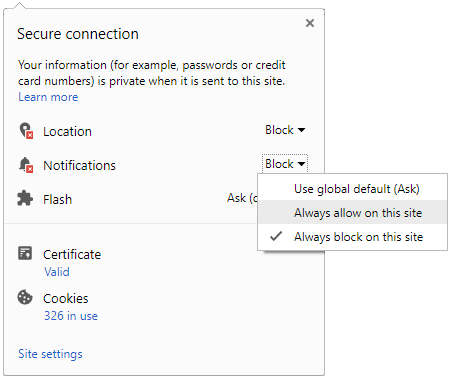
