Curious About AI's Energy Use? Learn How It Powers Your Questions!

Research indicates that generative AI tools carry significant hidden environmental costs, with advanced language models consuming more energy and emitting more carbon than simpler ones. Users can reduce their carbon footprint by choosing efficient models and by pressing for transparency about AI's environmental impact.

Generative AI tools, such as those used to draft emails or wedding vows, have become part of everyday life for many people. However, research indicates that these tools come with hidden environmental costs. Each AI prompt is broken down into clusters of numbers and processed in large data centres, many of which are powered by fossil fuels. This process can consume up to ten times more energy than a typical Google search.

Researchers in Germany examined 14 large language models (LLMs) to assess their environmental impact. They found that complex questions produced up to six times more carbon emissions than simpler queries. In addition, advanced LLMs with enhanced reasoning capabilities emitted up to 50 times more carbon than basic models when answering the same questions.

Energy Consumption and Model Performance

"This shows us the tradeoff between energy consumption and the accuracy of model performance," said Maximilian Dauner, a doctoral student at Hochschule München University of Applied Sciences. These advanced LLMs have billions more parameters than simpler models. Parameters can be loosely compared to the connections in the brain's neural networks: the more connections there are, the more complex the "thinking" a model can do.

Dauner explained that complex questions demand more energy partly because many AI models are trained to give detailed explanations. For instance, an AI chatbot solving an algebra problem might walk through every step of its solution. "AI expends a lot of energy being polite," Dauner noted, and he suggested that users be concise when interacting with AI models.

Choosing Efficient Models

Sasha Luccioni of Hugging Face pointed out that AI models differ widely in their environmental impact. Users can reduce their carbon footprint by choosing task-specific models, which are often smaller and more efficient. For example, a software engineer might need a model built for coding, while a student could use simpler tools for homework help.

Luccioni also recommended falling back on basic resources, such as online encyclopedias, for simple tasks rather than relying on powerful AI tools unnecessarily. Even models from the same company can differ in reasoning power, so users should find out which one best suits their needs.

The Challenge of Measuring Impact

Quantifying AI's environmental impact is difficult because it depends on factors such as a data centre's proximity to energy grids and the hardware it uses. Many companies do not disclose their energy consumption or server specifications, which makes accurate estimates harder still.

Shaolei Ren of the University of California, Riverside, noted that it is not possible to state AI's average energy or water consumption in general terms without examining individual models and tasks. If companies disclosed the carbon emissions per prompt, users could make more informed decisions about their usage.

The Push for Transparency

Dauner suggested that if people knew the environmental cost of generating each response, they might think twice about unnecessary uses of AI tools. Yet as companies build generative AI into more and more technologies, consumers may have little control over when it is used.

Luccioni expressed frustration at the rush to build generative AI into every technology despite its environmental consequences. With little information available on resource use, and with regulatory pressure for transparency unlikely in the US any time soon, consumers struggle to make eco-conscious choices.

Ren remains optimistic that the industry will become more resource-efficient. He emphasised that the fact that other sectors also consume significant energy does not diminish the importance of addressing AI's environmental impact.

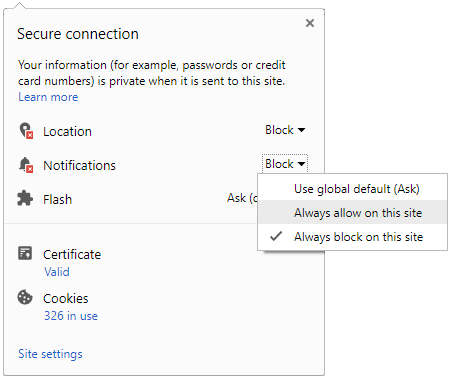
