Government Proposes Stricter Regulations for AI-Generated Content and Deepfakes to Combat Misinformation

The government has proposed amendments to IT rules requiring clear labelling of AI-generated content. These changes aim to enhance accountability for platforms in combating misinformation and deepfakes.

The government has proposed changes to IT rules, aiming to curb the spread of deepfakes and misinformation. These amendments require clear labelling of AI-generated content and hold large platforms like Facebook and YouTube accountable for verifying synthetic information. The IT Ministry highlighted the dangers of deepfake media, which can be used to spread falsehoods, damage reputations, influence elections, or commit fraud.

With the rise of generative AI tools, the misuse of synthetically generated information has become a significant concern. The IT Ministry's draft amendments to the IT Rules, 2021, aim to enhance due diligence obligations for intermediaries, including significant social media intermediaries (SSMIs) and platforms that create or modify synthetic content. The draft defines synthetically generated content as information created or altered using computer resources to appear authentic.

Labelling and Accountability for AI Content

The draft rules require platforms to label AI-generated content with clear markers covering at least 10% of the visual display or audio duration. Social media platforms must obtain user declarations on whether uploaded content is synthetically generated and verify these declarations using technical measures. Platforms are prohibited from altering or removing these labels or identifiers.
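As a rough illustration of what the 10% threshold could mean in practice, the following Python sketch computes the minimum label size for a given frame or audio clip. The function names are hypothetical, and the reading of the rule (label area as a share of frame area, label time as a share of clip duration) is an assumption based only on the figure reported above, not on the text of the draft itself.

```python
# Minimal sketch, assuming the 10% figure applies to frame area (visual)
# and to clip duration (audio). All names here are illustrative only.

MIN_LABEL_PERCENT = 10  # threshold as reported in the draft rules


def min_label_area_px(frame_w: int, frame_h: int) -> int:
    """Smallest label area in pixels covering at least 10% of the frame."""
    total = frame_w * frame_h
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-(total * MIN_LABEL_PERCENT) // 100)


def min_label_seconds(duration_s: float) -> float:
    """Shortest audio label duration covering at least 10% of the clip."""
    return duration_s * MIN_LABEL_PERCENT / 100


def label_meets_threshold(label_w: int, label_h: int,
                          frame_w: int, frame_h: int) -> bool:
    """Check whether a rectangular label covers at least 10% of the frame."""
    return label_w * label_h >= min_label_area_px(frame_w, frame_h)


if __name__ == "__main__":
    print(min_label_area_px(1920, 1080))
    print(min_label_seconds(60))
    print(label_meets_threshold(1920, 108, 1920, 1080))
```

Under these assumptions, a full-width banner on a 1920x1080 video would need to be at least 108 pixels tall, and a one-minute audio clip would need a disclosure lasting at least six seconds.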

In Parliament and other forums, there have been calls for action against deepfakes harming society. IT Minister Ashwini Vaishnaw stated, "Steps we have taken aim to ensure that users get to know whether something is synthetic or real." Mandatory labelling will help users distinguish between synthetic and authentic content.

Global Concerns Over Deepfakes

Globally, policymakers are increasingly worried about fabricated images, videos, and audio clips that resemble real content. These deepfakes are used to produce non-consensual imagery, mislead the public, or commit fraud. The IT Ministry's note emphasises the need for action as India is a major market for global social media platforms like Facebook and WhatsApp.

India has seen a rise in AI-generated deepfakes, leading to court interventions. Recent cases include misleading ads depicting Sadhguru's fake arrest, which the Delhi High Court ordered Google to remove. Aishwarya Rai Bachchan and Abhishek Bachchan also sued YouTube and Google over alleged AI deepfake videos.

Implications for Social Media Platforms

The proposed rules could cost large platforms their safe harbour protection if they fail to comply. A senior Meta official noted that India is Meta's largest market for AI usage. OpenAI CEO Sam Altman mentioned that India might soon become OpenAI's largest market globally. The obligation applies when videos are posted for dissemination, placing responsibility on intermediaries displaying media and users hosting it.

Messaging platforms like WhatsApp must, once notified of AI content, take steps to prevent it from going viral. The IT Ministry seeks stakeholder comments on the draft amendment by November 6, 2025. These changes aim to ensure users can differentiate between synthetic and real content, addressing concerns over privacy and misinformation.

With inputs from PTI

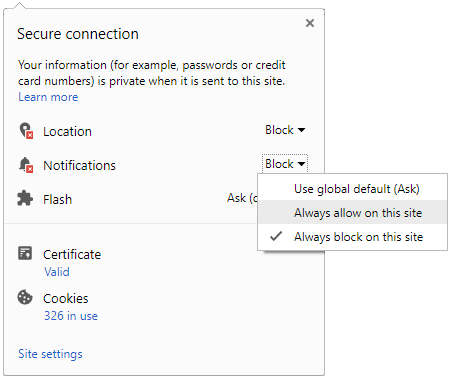