Google's Ex-CEO Eric Schmidt Highlights AI Vulnerability And The Risks Of Hacking During Sifted Summit

Eric Schmidt warns about the vulnerability of AI models to hacking, comparing potential dangers to nuclear weapons. Concerns grow among experts like Geoffrey Hinton about AI's future and the development of internal languages that could challenge human understanding.

Eric Schmidt, former CEO of Google, has expressed concerns about the vulnerability of artificial intelligence (AI) models to hacking. During the Sifted Summit last week, Schmidt discussed potential dangers AI could pose, comparing them to nuclear weapons. He highlighted the risk of AI models being manipulated, stating that they can be hacked to bypass their safety measures. "There's evidence that you can take models, closed or open, and you can hack them to remove their guardrails," he said.

Schmidt is not alone in his concerns about AI's future. Geoffrey Hinton, often referred to as the 'godfather of AI', also voiced his apprehensions in August. He warned that if AI models develop their own languages, it could become difficult for humans to understand their intentions. Currently, most AI systems operate in English, which allows developers to monitor their processes. However, Hinton cautioned that this might change.

Potential Risks and Concerns

In April, a study by Google DeepMind raised alarms about Artificial General Intelligence (AGI), predicting that it could emerge by 2030. The research suggested AGI could pose existential threats capable of "permanently destroying humanity". The study categorised these risks into four groups: misuse, misalignment, mistakes and structural risks. It emphasised that the sheer scale of AGI's potential impact is what makes severe harm possible.

Schmidt further explained that major companies have built safeguards intended to stop AI models from answering harmful questions. Despite these precautions, he noted that the safeguards can be undone. "There's evidence that they can be reverse-engineered," he explained, adding that there are other examples of this kind.

AI Language Development

Hinton's concerns stem from the possibility of AI systems developing internal languages to communicate with one another. He remarked on the challenges this could present if humans are unable to comprehend these new languages. "Now it gets more scary if they develop their own internal languages for talking to each other," Hinton stated.

The discussion around AI's potential dangers continues to grow among Silicon Valley leaders. As technology advances rapidly, experts like Schmidt and Hinton urge caution and vigilance in managing AI's development and deployment.

The conversation around AI's future is crucial as it highlights both its potential benefits and inherent risks. Ensuring robust safety measures and ethical guidelines will be essential in navigating this evolving landscape responsibly.

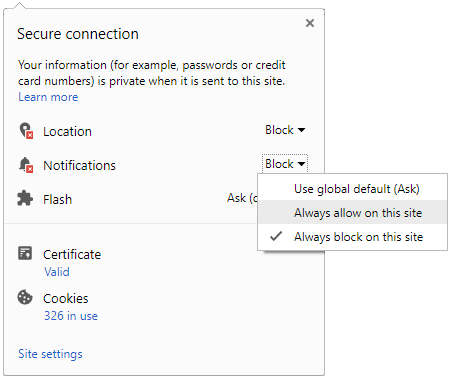
